What is an Oscilloscope?

An oscilloscope (or just “scope” if you’re far enough removed from microscopes for it to not be confusing) is a tool that measures and reports voltage over time (a “signal”). Modern oscilloscopes are digital and will show the signal (or various signals at the same time) on an LCD screen. There’s a host of buttons that control which part of the signal is shown and when (and/or how often) the signal should be “sampled.” It’s a very useful tool that lets you verify that the signals on your board are actually doing what they’re supposed to do, without degrading too much in the process.

Let’s get into it below.

So – what makes a scope good?

It should go without saying that an oscilloscope should be accurate and should introduce as little noise as possible. You want to make sure that what you’re seeing on the screen is what is actually going on where you’re probing. This is a simple concept to understand, but it’s easier said than done.

An oscilloscope should also be as generic as possible – ideally, you want an oscilloscope that is a “one size fits all” solution; you don’t want to reach for different oscilloscopes when working on different parts of your project. What this means practically is that you want to be able to “zoom in and out” of the signal along both the vertical and horizontal axes (as well as shift it up and down and left and right). This lets your oscilloscope display the widest variety of signals – small or large (y-axis), fast or slow (x-axis). It also lets the oscilloscope look at a single signal in various ways (zoomed out on the x-axis for long-term, steady-state behavior or zoomed in on the x-axis for short-term “transient” behavior, such as spikes or noise).

The vertical axis represents voltage, so to “zoom in” you need to amplify (increase the amplitude of) the signal and to “zoom out” you need to attenuate (decrease the amplitude of) the signal. This is done in what we call the “analog front-end” of the scope. The horizontal axis represents time, so to “zoom in” we’ll need to take samples more frequently and to “zoom out” we’ll add more time between the samples. Here’s a quick example:

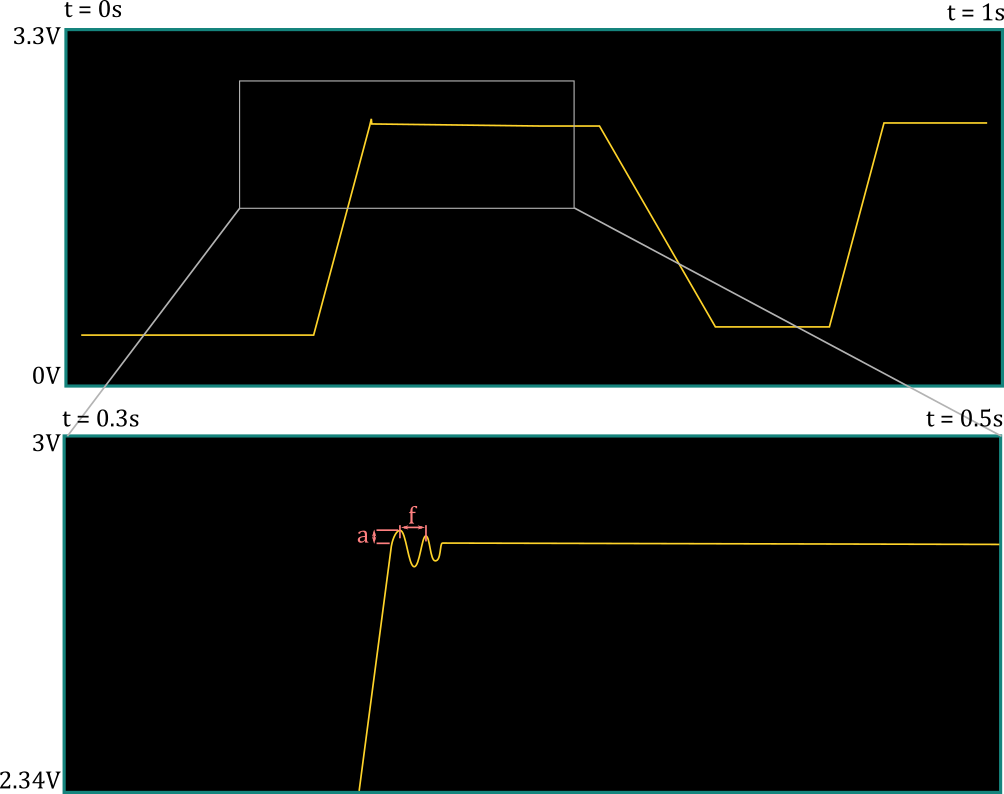

Example of zooming in to a signal on an oscilloscope

What is actually happening when we zoom in like in the above example? We can say that we adjust the “screen” so that it goes from showing what it was previously to being filled by only what’s in the grey box. That is, the screen is now limited to showing a “subset” of what it showed before – instead of voltage going from 0V (bottom of the screen) to 3.3V (top of the screen), now it only goes from 2.34V to 3V. Likewise, the time scale has been reduced – now it only goes from 0.3s on the far left of the screen to 0.5s on the far right of the screen. This sounds a lot like a “digital zoom” on a camera, but if we take a closer look at the second image, we see that we didn’t simply reduce the amount of information – we now have more information about the little “spike” we saw in the zoomed-out version. What before looked like a simple “spike” now looks like oscillatory behavior with an overshoot of a and a frequency of f.
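To make the "window" idea concrete, here is a minimal sketch of how a point on the signal could be mapped to a pixel for a given window. The function name `to_pixel` and the 300 x 200 pixel screen size are assumptions made for illustration; only the voltage and time limits come from the example above.

```python
def to_pixel(t, v, t_min, t_max, v_min, v_max, width_px=300, height_px=200):
    """Map a (time, voltage) point to a (column, row) pixel.

    Returns None when the point falls outside the current window.
    The screen size is a hypothetical default, not a real scope's spec.
    """
    if not (t_min <= t <= t_max and v_min <= v <= v_max):
        return None
    col = round((t - t_min) / (t_max - t_min) * (width_px - 1))
    # Row 0 is the top of the screen, so higher voltages get smaller rows.
    row = round((v_max - v) / (v_max - v_min) * (height_px - 1))
    return col, row


# Zoomed-in window from the example: 0.3s to 0.5s, 2.34V to 3V.
top_left = to_pixel(0.3, 3.0, 0.3, 0.5, 2.34, 3.0)
bottom_right = to_pixel(0.5, 2.34, 0.3, 0.5, 2.34, 3.0)
off_screen = to_pixel(0.1, 1.0, 0.3, 0.5, 2.34, 3.0)
```

Note that this mapping alone is only the "digital zoom" part: it spreads a smaller window over the same pixels, but it cannot add detail that was never sampled.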

To understand why, we have to know what happens in the guts of the scope to show you this signal on the display. Here’s a simplified block diagram of the hardware that obtains the signal to be displayed (we’ll expand on this later):

The microcontroller (MCU) is the brains of the operation – it runs the code that we write and tells the display what pixel goes where (and in what color). A microcontroller by itself, however, does not understand an analog signal; it works purely in the digital domain – ones and zeroes, or 3.3V (or other IO voltage) and GND. The MCU also doesn’t have a sense of “analog time” – it’s fed a clock from a crystal oscillator or other source, and runs an instruction (think of a line of code) every tick (this is again an oversimplification, but it’s a good enough description for our purposes).

The MCU needs a series of digital signals that somehow map to the original analog signal. This is the job of the ADC (Analog to Digital Converter), which takes in an analog signal at the input as well as a digital clock and spits out the “digital equivalent” of that signal at the output whenever the clock goes from low to high (or sometimes high to low). The voltage at the input of the ADC, however, is limited to a certain range, typically (but not always) between ground and the ADC’s supply (3.3V or 5V, for example).
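As a rough illustration of what "digital equivalent" means, here is a sketch of an idealized ADC in software. The 8-bit resolution, the 3.3V reference, and the name `adc_sample` are assumptions for the example; a real part has noise, nonlinearity, and its own coding scheme.

```python
def adc_sample(voltage, v_ref=3.3, bits=8):
    """Idealized ADC: turn a voltage into an integer code.

    Inputs outside 0..v_ref are clamped, which is the software analogue
    of the limited input range described above.
    """
    levels = 1 << bits                      # 256 levels for 8 bits
    clamped = min(max(voltage, 0.0), v_ref)
    code = int(clamped / v_ref * levels)    # truncate to the level below
    return min(code, levels - 1)            # full scale maps to the top code


codes = [adc_sample(v) for v in (-1.0, 0.0, 1.0, 2.0, 3.3, 5.0)]
```

Anything below ground or above the reference collapses to the lowest or highest code, so the information in that part of the signal is lost.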

This is why we have an analog front end – it needs to process the analog signal so that it fits within the dynamic range of the ADC (the min and max voltages at the input that it can understand). It’ll need to make the signal bigger or smaller (scale the amplitude of the signal linearly) as well as shift it up and down so that the user’s desired range/window is within the ADC’s dynamic range. This amplitude factor (gain) and offset will need to come from the MCU so that the user can configure how the signal is seen on the screen.
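The gain and offset can be worked out from the window the user asks for. The sketch below assumes an ideal, perfectly linear front end and an ADC input range of 0V to 3.3V; the function names are made up for illustration.

```python
def front_end_settings(v_lo, v_hi, adc_min=0.0, adc_max=3.3):
    """Return (gain, offset) so that v_lo..v_hi maps onto adc_min..adc_max.

    Model: v_adc = gain * v_in + offset (an ideal linear front end).
    """
    gain = (adc_max - adc_min) / (v_hi - v_lo)
    offset = adc_min - gain * v_lo
    return gain, offset


def apply_front_end(v_in, gain, offset):
    """Voltage seen by the ADC for a given input voltage."""
    return gain * v_in + offset


# Zoomed-in window from the earlier example: 2.34V to 3V.
gain, offset = front_end_settings(2.34, 3.0)   # gain is about 5 (amplify)

# A hypothetical 0V to 33V window needs attenuation instead (gain about 0.1).
wide_gain, wide_offset = front_end_settings(0.0, 33.0)
```

A gain above 1 is the "zoom in" case and a gain below 1 is the "zoom out" case; the offset slides the chosen window down (or up) into the ADC's range.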

We need something else between the MCU and the ADC. The ADC will be sampling the signals constantly; in order to support a wider range of “zooming” on the signal, we will sometimes need to sample the signal very often, if the user wants to zoom way in on the signal. For instance, if the user wants the screen’s width to be 1us, the screen is 300 pixels wide, and we want each pixel to represent a sample, we’ll need to take a sample every 1us / 300 samples = 3.33ns, which is a sampling frequency of 1/3.33ns or 300MHz (that’s very fast)! Realistically, we wouldn’t do a sample per pixel – we can start with a sample every ten pixels and connect them with a line – but you get the point. We’ll see that the ADC cost starts to increase astronomically with the maximum sampling frequency, so that will be the limiting factor here – probably somewhere around 50-60MHz; I’ll show you the options and we’ll do the analysis together. In any case, the MCU cannot handle 50 million samples a second. Let’s say a typical embedded processor runs at a clock frequency of 200MHz – that’s 4 cycles per sample if we’re sampling at 50MHz – there’s no way it’ll be able to do the triggering, processing, displaying instructions, and anything else it needs to do in those four cycles of “free time” before the next sample comes in!
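The arithmetic above is easy to play with in a few lines. This is only a back-of-the-envelope sketch; the function names are invented, and the numbers are the ones used in the paragraph.

```python
def required_sample_rate_hz(screen_time_s, width_px, px_per_sample=1):
    """Sample rate needed to fill the screen for a given time span."""
    samples_on_screen = width_px / px_per_sample
    return samples_on_screen / screen_time_s


def cycles_per_sample(cpu_hz, sample_rate_hz):
    """CPU clock cycles available between two consecutive samples."""
    return cpu_hz / sample_rate_hz


one_per_pixel = required_sample_rate_hz(1e-6, 300)          # about 300 MHz
one_per_ten_pixels = required_sample_rate_hz(1e-6, 300, 10)  # about 30 MHz
budget = cycles_per_sample(200e6, 50e6)                      # 4 cycles
```

Dropping to one sample every ten pixels cuts the required rate by a factor of ten, which is what brings the 1us screen within reach of a 50-60MHz ADC.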

What we need to think about here is that, in practice, most samples a scope takes are thrown away and never displayed. That makes sense – there’s no way a human brain would be able to process 50 million (distinct) samples a second (although you would get some benefit from displaying 1000 samples that were an average of 50 million – but let’s put that aside for now). The scope setting that determines which samples are thrown away and which are shown on the screen is called the trigger. Triggering circuitry can be arbitrarily complex, but at its simplest the user configures what threshold voltage they’d like to see, and whether they’d like to see the signal cross that voltage from below (rising edge), above (falling edge), or both. The scope then displays samples around that triggering voltage – typically some samples that happened before it and some that happened after (the exact delay between the trigger and the samples shown on the screen can also be configured by the user). The trigger can also be set to “auto” – which means the scope will “continuously” show samples – but realistically, this doesn’t mean you see all the samples. In fact, what most scopes are doing is waiting for a trigger event to happen, and if they don’t see one, then they will trigger themselves after a preset “timeout”, which makes it look like the scope is running continuously.
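Here is a minimal software sketch of the simplest trigger described above: a threshold plus an edge direction, with an optional "auto" timeout. It is a model of the behavior, not of any real scope's circuitry; `find_trigger` and its parameters are hypothetical names, and the timeout is counted in samples for simplicity.

```python
def find_trigger(samples, threshold, edge="rising", auto_timeout=None):
    """Return the index of the first sample that completes a trigger event.

    edge is "rising", "falling", or "both". If auto_timeout is set and no
    edge is seen within that many samples, trigger anyway ("auto" mode).
    Returns None if nothing triggers.
    """
    for i in range(1, len(samples)):
        prev, cur = samples[i - 1], samples[i]
        rising = prev < threshold <= cur    # crossed from below
        falling = prev > threshold >= cur   # crossed from above
        if (edge in ("rising", "both") and rising) or (
            edge in ("falling", "both") and falling
        ):
            return i
        if auto_timeout is not None and i >= auto_timeout:
            return i  # no edge seen in time, so trigger ourselves
    return None


pulse = [0, 1, 2, 3, 2, 1, 0]
flat = [0] * 10

rising_at = find_trigger(pulse, 1.5, "rising")
falling_at = find_trigger(pulse, 1.5, "falling")
no_trigger = find_trigger(flat, 1.5)
auto_at = find_trigger(flat, 1.5, auto_timeout=4)
```

On the flat signal the normal trigger never fires, while the auto mode gives up waiting after the timeout and fires on its own, which is why an auto-triggered scope looks like it is running continuously.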

What this means in practice is that the MCU doesn’t need to look at every sample from the ADC – what we should try to have instead is some hardware that looks at every sample and figures out if a trigger event has happened. This same hardware can be responsible for saving the samples to memory (remember – both before and after the trigger, so we need to be continuously storing the samples, not just after we see a trigger) and managing the memory so we don’t overflow it with samples that we’re never going to need. Once the trigger has happened, our circuitry can let the MCU know that it needs to get the samples and can tell it where exactly in the memory to look for the samples. The MCU can then take its time, read the samples out of memory, and display them.
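The "continuously store, then hand off around the trigger" idea can be modeled with a ring buffer. The sketch below does in software what the paragraph proposes doing in dedicated hardware, so treat it purely as an illustration; `capture`, `pre`, and `post` are names invented here, and only a rising-edge trigger is handled.

```python
from collections import deque
from itertools import islice


def capture(sample_stream, threshold, pre=4, post=4):
    """Return up to `pre` samples before a rising-edge trigger, the trigger
    sample itself, and up to `post` samples after it.

    Returns None if the stream ends without a trigger.
    """
    # Ring buffer: once full, the oldest sample is dropped automatically,
    # so memory never grows no matter how long we wait for a trigger.
    history = deque(maxlen=pre)
    stream = iter(sample_stream)
    prev = None
    for cur in stream:
        if prev is not None and prev < threshold <= cur:
            window = list(history) + [cur]
            window.extend(islice(stream, post))  # keep recording after trigger
            return window
        history.append(cur)
        prev = cur
    return None


ramp = [0, 0, 0, 1, 2, 3, 4, 5, 6, 7]
window = capture(ramp, threshold=2.5, pre=2, post=3)
nothing = capture([0, 0, 0], threshold=2.5)
```

The fixed-size buffer is what lets the scope show what happened before the trigger without storing every sample it has ever seen.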

Here’s what we need, then, to make an oscilloscope that is as generic as possible – each of these will warrant several posts of their own:

- An analog front end with a variety of MCU-controllable settings to both scale the signal as well as offset it

- An ADC capable of sampling the signal as fast as possible without breaking the bank

- A flexible and MCU-controllable triggering and logic circuit to process and store the “interesting” samples coming out of the ADC

- A good amount of memory for the samples, so that we can support a delayed version of the signal (make the signal move left or right on the scope screen)

- A decently fast MCU and a good display to make sense of the samples

Don’t worry if there are parts of the above concepts that seem too complicated to grasp now – we’ll delve into each of these elements in more detail individually (and if you’re angry that I got a lot of stuff wrong or oversimplified, I hear you – I’ll be learning more throughout this process too). What I’m hoping you’ll get from this, however, is that hardware design is often fitting blocks together to get what you want. You start with what you know (“I need an MCU”), figure out what it can’t do (analog signals), find a component that can (an ADC), and iterate from there (“the ADC’s input dynamic range is limited”). This is one of my favorite aspects of hardware design, and I hope it intrigues you, too!